As a systems technologist, I’ve spent much of my career watching brilliant database teams wrestle with a simple but stubborn problem: memory. In-memory databases (IMDBs) promised transformative performance by eliminating disk latency, but they’ve always been hemmed in by the hard limits of DRAM—both its cost and its capacity ceiling.

The workaround for the last decade has been sharding: breaking a database into pieces and spreading it across many servers. It worked, but at a cost. Every shard introduces latency, redundancy, and operational overhead. We’ve all seen query latencies balloon, replicas multiply, and teams burn cycles on rebalancing and failover strategies.

Compute Express Link (CXL) changes this picture completely. By allowing memory to be expanded and pooled beyond the socket—at terabyte and even hundred-terabyte scale per node—CXL makes the original promise of IMDBs achievable again. Not in theory. In practice.

Let me explain why this matters for you as a database architect or data scientist, and why I believe CXL will reshape the foundation of your systems in the coming years.

Sharding Was Always a Tax, Not a Strategy

We’ve accepted sharding as “the only way to scale,” but it’s really been a tax:

- Latency penalty: Each added shard can inflate query latency by 10–30%. Across enough shards, a query that takes 10 ms on a single node can balloon to 100 ms or more.

- Replication burden: Metadata and hot datasets must be copied across nodes, consuming both memory and bandwidth.

- Operational friction: Re-sharding, balancing, and recovery add complexity that distracts from building value.
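To see how a per-shard penalty adds up, here is a toy model. It assumes the 10–30% overhead compounds multiplicatively with each shard beyond the first. That compounding rule is a simplification made here for illustration, not a measured law; real fan-out latency is usually dominated by the slowest shard and the network.

```python
def sharded_latency_ms(single_node_ms: float, shards: int, overhead_per_shard: float) -> float:
    """Latency under an assumed compounding penalty: each shard beyond the
    first multiplies latency by (1 + overhead_per_shard)."""
    if shards < 1:
        raise ValueError("need at least one shard")
    return single_node_ms * (1.0 + overhead_per_shard) ** (shards - 1)


def shards_to_reach(single_node_ms: float, target_ms: float, overhead_per_shard: float) -> int:
    """Smallest shard count at which the modeled latency reaches target_ms."""
    if overhead_per_shard <= 0 and target_ms > single_node_ms:
        raise ValueError("target is unreachable without a positive penalty")
    shards = 1
    while sharded_latency_ms(single_node_ms, shards, overhead_per_shard) < target_ms:
        shards += 1
    return shards


for overhead in (0.10, 0.30):
    n = shards_to_reach(10.0, 100.0, overhead)
    print(f"{overhead:.0%} per shard: 10 ms -> 100 ms at {n} shards")
```

Under this assumption, a 30% penalty pushes a 10 ms query past 100 ms at about ten shards, while a 10% penalty takes more than twenty.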

With CXL, that tax disappears. Instead of sharding a 20 TB financial risk analytics workload across five servers, you can run it on a single node with 2 TB of DRAM plus 18 TB of CXL.mem. Scale that up with a disaggregated CXL chassis, and a single node can hold 50 TB, even 100 TB, of memory. That’s enough to consolidate entire datasets that today require clusters of ten or more nodes.

For you, the architect, this means cleaner designs, fewer moving parts, and drastically lower latency.
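The consolidation arithmetic in the 20 TB example is simple enough to write down. The sketch counts the identical nodes needed to hold a dataset. The 4 TB-per-server figure for the DRAM-only case is inferred from the five-server example rather than stated in it, and the function deliberately ignores replicas, free headroom, and index overhead, all of which a real capacity plan must add.

```python
import math


def nodes_needed(dataset_tb: float, dram_tb_per_node: float, cxl_tb_per_node: float = 0.0) -> int:
    """Smallest number of identical nodes whose DRAM + CXL.mem capacity holds the dataset.

    Ignores replication, headroom, and index overhead (simplifying assumptions).
    """
    per_node = dram_tb_per_node + cxl_tb_per_node
    if per_node <= 0:
        raise ValueError("node capacity must be positive")
    return math.ceil(dataset_tb / per_node)


# DRAM-only servers, assumed to hold 4 TB each so that 20 TB needs five of them.
print(nodes_needed(20, dram_tb_per_node=4))                       # 5
# One node with 2 TB DRAM + 18 TB CXL.mem, as in the example.
print(nodes_needed(20, dram_tb_per_node=2, cxl_tb_per_node=18))   # 1
```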

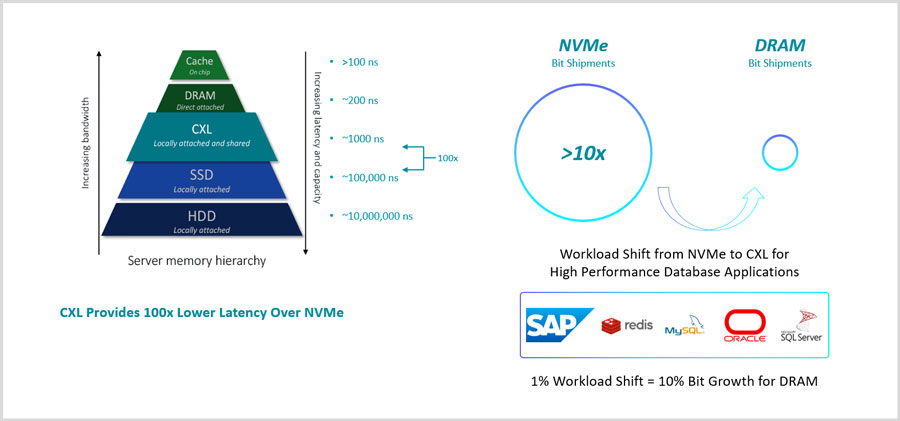

A True Tiered Memory Architecture

In practice, most of your data isn’t hot. You probably see the same Pareto curve we do: 20% of the dataset accounts for 80% of accesses. But until now, you’ve had no middle ground between DRAM and SSD. Either you paid for expensive DRAM, or you suffered the latency cliff of SSD (tens of microseconds to milliseconds).

CXL gives us a third tier:

- Hot data in DRAM (80–120 ns, ~200 GB/s/socket).

- Warm and cold data in CXL.mem (200–800 ns, up to 256 GB/s/node).

- Archival data on SSD (50–100 µs latency, GB/s bandwidth).
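The 80/20 access pattern and the tier figures combine into a quick estimate of average access latency. This sketch assumes mid-range values from the list (100 ns for DRAM, 500 ns for CXL.mem, 75 µs for SSD) and that the hot 20% of data captures exactly 80% of accesses; both are simplifications for illustration.

```python
def expected_latency_ns(tiers):
    """Weighted mean access latency; tiers is a list of (fraction_of_accesses, latency_ns)."""
    total = sum(fraction for fraction, _ in tiers)
    if abs(total - 1.0) > 1e-9:
        raise ValueError("access fractions must sum to 1")
    return sum(fraction * latency for fraction, latency in tiers)


# 80% of accesses hit DRAM; the remaining 20% go to the second tier.
dram_plus_cxl = expected_latency_ns([(0.8, 100), (0.2, 500)])     # ~180 ns
dram_plus_ssd = expected_latency_ns([(0.8, 100), (0.2, 75_000)])  # ~15,080 ns
print(round(dram_plus_cxl), round(dram_plus_ssd))
```

Even though only a fifth of accesses leave DRAM, the SSD case is dominated by that tail and comes out more than 80 times slower on average than the DRAM plus CXL.mem case.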

This lets you design systems where the entire dataset lives in memory—hot in DRAM, long-tail in CXL—without falling back to disk. For data scientists, this means your training sets, feature stores, or graph structures don’t spill to SSD mid-query. For architects, it means predictable performance across workloads.
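How data is split between DRAM and CXL.mem is a policy decision. Below is a minimal static placement sketch, assuming object-level granularity and known access counts; the `Item` type, the `place` helper, and the table names are hypothetical. In practice CXL.mem is commonly exposed to the OS as a CPU-less NUMA node, and placement may instead be handled at page granularity by the kernel or by the database itself.

```python
from dataclasses import dataclass


@dataclass
class Item:
    key: str         # hypothetical table or partition name
    size_gb: float
    accesses: int    # accesses over some observation window (assumed known)


def place(items, dram_gb: float, cxl_gb: float) -> dict:
    """Greedy, static placement: the hottest data per GB goes to DRAM, the rest
    to CXL.mem, and SSD only when both memory tiers are full."""
    free = {"dram": dram_gb, "cxl": cxl_gb}
    placement = {}
    # Rank by access density so small, hot objects claim scarce DRAM first.
    for item in sorted(items, key=lambda i: i.accesses / i.size_gb, reverse=True):
        for tier in ("dram", "cxl"):
            if item.size_gb <= free[tier]:
                free[tier] -= item.size_gb
                placement[item.key] = tier
                break
        else:  # neither memory tier had room left
            placement[item.key] = "ssd"
    return placement


items = [
    Item("orders_hot", 1.5, 9_000),
    Item("orders_2019", 6.0, 400),
    Item("audit_log", 10.0, 50),
]
print(place(items, dram_gb=2, cxl_gb=18))
```

With enough CXL.mem capacity, nothing falls through to SSD; shrink `cxl_gb` and the coldest object is the first to spill.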

Learn more in our LIQID IMDB CXL Solution Brief.

Convergence of Databases and AI

One of the most exciting implications of CXL is that the boundary between databases and AI systems begins to dissolve. Let me give you a few concrete examples:

- Vector databases (Milvus, Pinecone): With the IVF (inverted file) index in DRAM and its partitions in CXL.mem, a single node can store 40–100 TB of embeddings. Latency drops from 100 ms (sharded) to ~10 ms. That changes how you design retrieval-augmented generation (RAG) pipelines.

- Graph databases: Fraud detection graphs with billions of nodes and edges no longer spill to SSD. Hot fraud rings sit in DRAM, long-tail edges in CXL.mem. What used to take seconds now completes in under 1 ms.

- Hybrid OLTP + AI: Recommender engines need both transaction support and inference against embeddings. With CXL, both workloads share the same memory pool—no expensive PCIe data copies, no duplicate storage.
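The vector-database layout described above follows the general IVF pattern: a small set of centroids is searched first, then only the few partitions closest to the query are scanned. The NumPy sketch below illustrates that two-step search. It is not Milvus’s or Pinecone’s API, it samples centroids instead of training them with k-means, and the DRAM/CXL.mem split appears only in comments, since a Python array has no control over which memory tier backs it.

```python
import numpy as np


def build_ivf(vectors: np.ndarray, n_lists: int, rng: np.random.Generator):
    # Coarse quantizer: sample n_lists data points as centroids (a stand-in for k-means).
    # In the tiered layout, this small array is what would stay in DRAM.
    centroids = vectors[rng.choice(len(vectors), n_lists, replace=False)]
    dists = ((vectors[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=-1)
    assign = dists.argmin(axis=1)
    # Inverted lists: vector ids per centroid. These partitions, and the
    # vectors they reference, are the bulk that would live in CXL.mem.
    lists = [np.flatnonzero(assign == c) for c in range(n_lists)]
    return centroids, lists


def search(query, vectors, centroids, lists, k=5, nprobe=4):
    # Step 1: rank the (DRAM-resident) centroids and keep the nprobe closest.
    probe = np.argsort(((centroids - query) ** 2).sum(axis=1))[:nprobe]
    # Step 2: exhaustively scan only the probed (CXL-resident) partitions.
    cand = np.concatenate([lists[c] for c in probe])
    d = ((vectors[cand] - query) ** 2).sum(axis=1)
    return cand[np.argsort(d)[:k]]


rng = np.random.default_rng(0)
vectors = rng.standard_normal((2_000, 16)).astype(np.float32)
centroids, lists = build_ivf(vectors, n_lists=32, rng=rng)
print(search(vectors[7], vectors, centroids, lists, k=5))
```

The `nprobe` parameter is the usual recall-versus-work dial: probing more partitions touches more of the CXL-resident data per query.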

For data scientists, this is transformative: your embeddings, your KV caches, your training sets, your graph structures—everything can live in memory, side by side. For architects, it means designing systems where the database and the model serve from the same memory substrate.

Analytics Without the I/O Bottleneck

Consider a TPC-H-style analytic workload. Scanning a 1 TB fact table from SSD takes ~30 seconds. With CXL.mem, the same scan takes ~3 seconds, nearly indistinguishable from DRAM at ~2 seconds.

That’s not an incremental gain—that’s an order-of-magnitude reduction that flips the bottleneck from I/O to compute. Your joins, group-bys, and hash lookups stop thrashing disks and run entirely in memory.

For database architects, this means you can stop designing around the slowest layer. For data scientists, it means iterative analysis and model training loops that once crawled now feel interactive.
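Full scans like this are, to first order, bandwidth-bound, so a back-of-the-envelope estimate is table size divided by sustained bandwidth. The sketch plugs in the bandwidth figures from the tier list earlier in the article; the two-socket DRAM configuration is an assumption, and the model ignores CPU cost, decompression, and predicate evaluation.

```python
def scan_seconds(table_bytes: float, bandwidth_bytes_per_s: float) -> float:
    """Lower bound on a full sequential scan: bytes / sustained bandwidth."""
    return table_bytes / bandwidth_bytes_per_s


TB = 1e12
GB_S = 1e9

# Bandwidth-bound estimates for a 1 TB fact table (assumed configurations):
cxl = scan_seconds(1 * TB, 256 * GB_S)       # CXL.mem at up to 256 GB/s per node: ~3.9 s
dram = scan_seconds(1 * TB, 2 * 200 * GB_S)  # two sockets at ~200 GB/s each: 2.5 s

# Working backwards: what aggregate bandwidth does a ~30 s SSD scan imply?
implied_ssd_gb_s = (1 * TB / 30) / GB_S      # ~33 GB/s
print(f"CXL {cxl:.2f} s, DRAM {dram:.2f} s, implied SSD bandwidth {implied_ssd_gb_s:.1f} GB/s")
```

These estimates land in the same range as the ~3 s and ~2 s figures, and the ~30 s SSD figure corresponds to roughly 33 GB/s of aggregate read bandwidth, which already assumes several NVMe drives working in parallel.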

Toward Persistent CXL

The first wave of CXL devices focuses on expansion, but persistence is on the horizon. Imagine checkpointing your IMDB directly into persistent CXL memory. No SSD logs, no multi-minute recovery times. Just a restart in seconds.

When your systems power risk analytics, fraud detection, or trading engines, that recovery time matters. And with persistent CXL, you’ll be able to design architectures that combine speed, resilience, and simplicity.
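On Linux, byte-addressable persistent memory has typically been exposed through a DAX-capable filesystem and mapped into a process with `mmap`. Assuming persistent CXL devices are surfaced the same way (an assumption, not a statement about any specific product), a checkpoint becomes a write into a mapped region followed by a flush. The sketch uses an ordinary file-backed `mmap` as a stand-in. The header layout and the `checkpoint`/`recover` helpers are hypothetical, and a production design would need a real crash-consistency protocol.

```python
import mmap
import os
import struct
import tempfile

# Hypothetical checkpoint header: (sequence number, payload length), little-endian.
HEADER = struct.Struct("<QQ")


def checkpoint(path: str, seq: int, payload: bytes) -> None:
    """Write payload, then header, into a memory-mapped region, flushing each step."""
    size = HEADER.size + len(payload)
    with open(path, "r+b" if os.path.exists(path) else "w+b") as f:
        f.truncate(size)
        with mmap.mmap(f.fileno(), size) as m:
            m[HEADER.size:size] = payload
            m.flush()  # msync the payload before publishing the header
            m[:HEADER.size] = HEADER.pack(seq, len(payload))
            m.flush()  # on real persistent memory, cache-line flushes would replace msync


def recover(path: str):
    """Map the checkpoint read-only and return (sequence number, payload)."""
    with open(path, "rb") as f, mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ) as m:
        seq, n = HEADER.unpack_from(m, 0)
        return seq, bytes(m[HEADER.size:HEADER.size + n])


with tempfile.TemporaryDirectory() as d:
    p = os.path.join(d, "imdb.ckpt")
    checkpoint(p, 1, b"hot tables + indexes")
    print(recover(p))
```

Recovery here is a map-and-read of the region rather than a replay of SSD logs, which is the property the restart-in-seconds argument relies on.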

Why This Matters Now

As technology leaders, we’re trained to filter hype from reality. So let me be clear: this is real. Every major CPU, GPU, and memory vendor is building around CXL. Hyperscalers are already deploying it at scale.

The strategic implications for you are immediate:

- Revisit scale-up assumptions. You can now build single-node IMDBs with tens of terabytes—and soon 100 TB—of coherent memory.

- Converge your stacks. Databases and AI systems no longer need separate infrastructure. Start designing unified memory pools.

- Rethink TCO. Sharding inflates costs. Consolidating into fewer, larger CXL-rich nodes lowers both hardware and operational expenses.

- Prepare for persistence. The next wave of CXL will collapse the gap between in-memory performance and durable storage.

Closing Thoughts

In-memory databases have always represented the purest form of performance—but they were bounded by DRAM. We stretched the model with sharding, but at the cost of latency and complexity.

CXL removes those boundaries. You can now build IMDBs at scales once unthinkable—50 TB, 100 TB, and beyond per node. You can collapse database and AI infrastructure into a single coherent memory fabric. And you can deliver performance that keeps your teams focused on innovation, not workarounds.

For database architects and data scientists, the message is clear: CXL isn’t just another interconnect. It is the new foundation for memory-centric computing, and IMDBs are among the first domains where its impact will be transformative. Those who embrace it early will not just cut costs—they will unlock entirely new classes of workloads and real-time intelligence that were previously impossible.

The age of memory disaggregation has arrived, and IMDBs are about to become one of its killer applications. The only question is how quickly you embrace it.

Take the Next Step Toward Memory-Centric Design

Contact us to speak about our solution.

Schedule a demo to see our solution in action.